Lolllllll burn it down

This is 100% their most consistent position. McDonnell v. US from 2016 was also a unanimous (8-0) decision throwing out a conviction, and it was laughably blatant bribery in that case too. Pretty much, unless there's a notary public present for a meeting where the briber says the magic words "I am giving you this money to do X, this is a bribe," hands over a sack of cash with a literal $ on it, and the official says "I accept this bribe and I am going to do X only because you're giving me this sack of cash," it's fair game.

I think Ted Stevens's conviction also got overturned LOL

LAW

a rare good decision.

Reagan appointee!

Interesting article: the law that courts have long read as allowing qualified immunity was actually passed by Congress with stronger wording than what ended up in the federal law books. Pending "lol fuck you" SCOTUSaments, this could change qualified immunity jurisprudence substantially.

Could this be the first lol fuck you in American history? As in, “Lol fuck you, I’m not writing this part down.”

3/5s says “hi”.

3/5ths was an enormous fuck you, but was it an "LOL fuck you, this law is not gonna work"? I would argue no; it was more just straight-up empowering racists.

The Section 230 cases against Google & Twitter (Gonzalez v. Google and Twitter v. Taamneh) basically got punted, leaving the status quo in place.

I can't believe they even heard it. If 230 goes poof, SPY goes down 10%+ that day, imo. Entire business models disappear.

Yeah, that would undermine the fabric of the internet lol

I'm coming around to changing my mind on Section 230. The internet, and social media in particular, are not a town square, and they aren't a phone company. They use algorithms designed by people (i.e., editorial decisions) not just to host speech but to firehose you with whatever content they think you'll find most engaging.

A telephone call reaches one person, and you have to know their number. A town square can reach dozens or hundreds, but you don't get a customized town square of people who agree with you (or who otherwise keep you engaged in the town square for hours every day). A town square can't connect the loon in one square with the isolated loons from other squares and gather them into their own little fiefdom that reinforces their beliefs.

The downside, of course, is the censorship of the voices of the powerless and those who would speak against the company or its overseers in the government, but man, I am not sure we made a good trade.

this is it

Something like Craigslist, which just has a bunch of posts in chronological order, would seem to be covered by 230. Even a Twitter timeline made up only of people you have explicitly opted into could still be 230-friendly, assuming there's no algorithmic bubbling-up of particularly "chosen" content.

I appreciate this take, but I don’t know that the analogies are particularly relevant or foundational. Like, the justification for section 230 isn’t necessarily “websites should be treated like the phone company”, because like you said they are obviously different. Rather, it was passed in the 90s specifically to support the growth of the internet, recognizing the ways in which it is different:

> Section 230 was developed in response to a pair of lawsuits against online discussion platforms in the early 1990s that resulted in different interpretations of whether the service providers should be treated as publishers or, alternatively, as distributors of content created by their users. Its authors, Representatives Christopher Cox and Ron Wyden, believed interactive computer services should be treated as distributors, not liable for the content they distributed, as a means to protect the growing Internet at the time.

You can imagine reforms or tweaks, but I don’t know about looking at this and thinking we just straight-up got it wrong:

> No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.

That is, at its core, what makes the internet work; it’s why this site can exist, or why YouTube can exist.

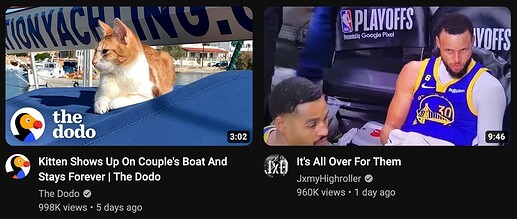

The question of editorial decisions is interesting. These are the first two videos YouTube shows me:

These are videos I'm interested in; if I had to broadly classify the content I watch on YT, it's (a) cat videos (sometimes dogs too, if they're very good dogs), (b) basketball content (skewing Warriors), and (c) history videos, mostly from this channel. Has this homepage above already lost Section 230 protection by trying to show me content it thinks is relevant? I feel like this is very harmless, and what we're really worried about here is a Ben Shapiro video showing up.

I'm of the opinion that humanity isn't ready for the internet; we don't have the bullshit filters needed to avoid getting sucked in by good stories. Shut it all down.

The law doesn't do a good job with scale. Every content provider targets its content at an intended audience; however, before the algorithms, this was a very general level of targeting. Today, it's possible to target content to individual users, which is entirely different from someone putting cat pictures into a cat-fancier magazine.

I came to the conclusion a few days ago it just can’t really “work.”

Take online news. It's all click-driven, so sensationalist content or misinformation to drive clicks is the capitalist incentive. How does one fix that? Well, you could fund news outlets with tax money, but that puts politicians in control of media. You could try regulating, but same problem.

the entire system just needs to burn

YouTube isn't just hosting information generated by other people, though. It's making conscious decisions about what information to highlight and amplify. Book publishers are absolutely liable for amplifying something someone else wrote. So is the NYT if it publishes, e.g., a libelous letter to the editor.

Sure, YouTube curates what they think you want to see, or more precisely, what will keep you watching more YouTube videos. They don't feed you Shapiro because Shapiro won't keep you engaged. For other people, they're deciding to amplify anti-vaxx, 9/11 truther, ISIS, etc. videos because that content keeps those people engaged.

The argument for not holding a carrier liable for publishing content made by third parties assumes it is just a passive host that isn't making decisions about what gets posted and what gets amplified, in contrast to a book publisher or the NYT, which absolutely make decisions about what they publish and what they amplify. E.g., telephone companies aren't making decisions about who's saying what on their phone networks; they're just passive carriers. But do you think people would treat them differently if they had algorithms that fed you calls from ISIS a few times per day? Sure, a lot of people would just hang up, but ISIS would also reach a few more nutcases: people who would otherwise have gone unreached but for the phone company's decision to amplify those calls.

The notion that all these websites would cease to exist but for Section 230 is corporate propaganda. The solution is obvious: if they are going to amplify certain messages because those messages generate increased engagement, then they cannot do so amorally, amplifying any message that drives engagement; instead, they must moderate the messages being amplified. This website is moderated, and it could exist in a world where websites were held liable for hosting or amplifying illegal content. There is a potential issue of malicious nuisance lawsuits filed against small-time websites to make the cost of defending against them greater than the value the website provides, but that's a potentially fixable issue, with things like loser-pays rules for legal bills. That's not an issue for multibillion-dollar corporations, which are whining about this because they want to save a few milly a year in content moderation salaries, not because they'd forgo billions in revenue by having to moderate content.

I don't think it's possible to reform or tweak this stuff in any meaningful way. You could require high-level publication of the suggestion algorithm, but what's the point? We already know how it works.

The problem at its core, of course, is that content that evokes fear and hatred gets the most engagement. The end. How do you regulate that?